Hey y’all! Long time no blog! I want to start talking about encapsulated inequality, but first let’s do some housekeeping.

Where have I been?

I have been blogging, just not here (I’ve been keeping the blog fires going over at 24×7 IT Connection). I’ve been working and travelling. I fell and hit my head in Barcelona, and that set off some other health issues. I’m fine (and lucky!), but it did slow me down a bit. Funny how the universe finds a way to make us do that, isn’t it?

I have a lot to talk about, so I’m going to commit to doing that here instead of just on Twitter. I also need to import my old blog posts into this WordPress instance. If anyone out there wants to barter some database help for writing, delicious jam, or something like that, shoot me an email!

I want to use my blog space to track what seems to be inevitable: encapsulating inequality in digital formats. There is so much proof that this is happening that I want to start recording examples, just to wrap my head around it. Right now it seems inevitable to me, but perhaps by recording it we can find ways to fight this trend.

Encapsulated Inequality

One of the best sources I’ve read so far about this is a book titled Automating Inequality by Virginia Eubanks. The book looks at how the automation of social systems that are supposed to provide a safety net to those in need, things like health insurance, homelessness, and child welfare systems, has led to people being denied services because of seemingly arbitrary decisions made by algorithms. I really recommend reading this book.

This book is what made me think of the term encapsulating inequality. I grew up poor, and until I graduated from college I was on some sort of public support. Navigating the maze was insane, and to receive benefits you gave up any expectation of privacy. Even after you gave them documentation about every angle of your life (from paychecks to lease agreements to your kids’ report cards), you learned pretty early to keep every scrap of paper they sent you. God help you if something went awry and you didn’t have documentation, or, worse yet, if you couldn’t find an agency worker who understood the back end of the system and could help you untangle things.

As Virginia Eubanks documents in her book, when state legislatures decided to eliminate waste from these social systems, they relied on algorithms – really decision trees – to replace social workers. These systems encapsulated inequality in the name of cutting waste. The book connects the poor houses of the past with what’s going on now, and it all betrays our country’s hatred of the poor.

Encapsulated inequality is taking the current systems we have, complete with existing bias against the poor, people of color, and the disabled (aka anyone who is OTHER), and force-fitting them into algorithms and ill-advised machine learning under the guise of creating a data-driven system. Doing it this way, instead of building a new system from the ground up with a diverse group of data scientists, will encapsulate bias that will impact our children’s children.

As you can imagine, this topic hits very close to home for me. I’ve been mulling it over for weeks, and it’s why I want to start this series on encapsulated inequality.

The Army is creating combat robots

This post came across my Twitter feed (thank you Simon Wardley for quote tweeting Vinay Gupta). It connects the potential nullification of Second Amendment rights with the rise of combat robots, using the conflict in Iraq as an example. It is a very insightful essay. Take this snippet, for example:

It is time to insist that the military does not learn how to defeat armed civilian populations. We must exert political control over the military to prevent them developing these capabilities, or in some future scenario, these capabilities will be turned against us, and we will have no choice in the matter any more.

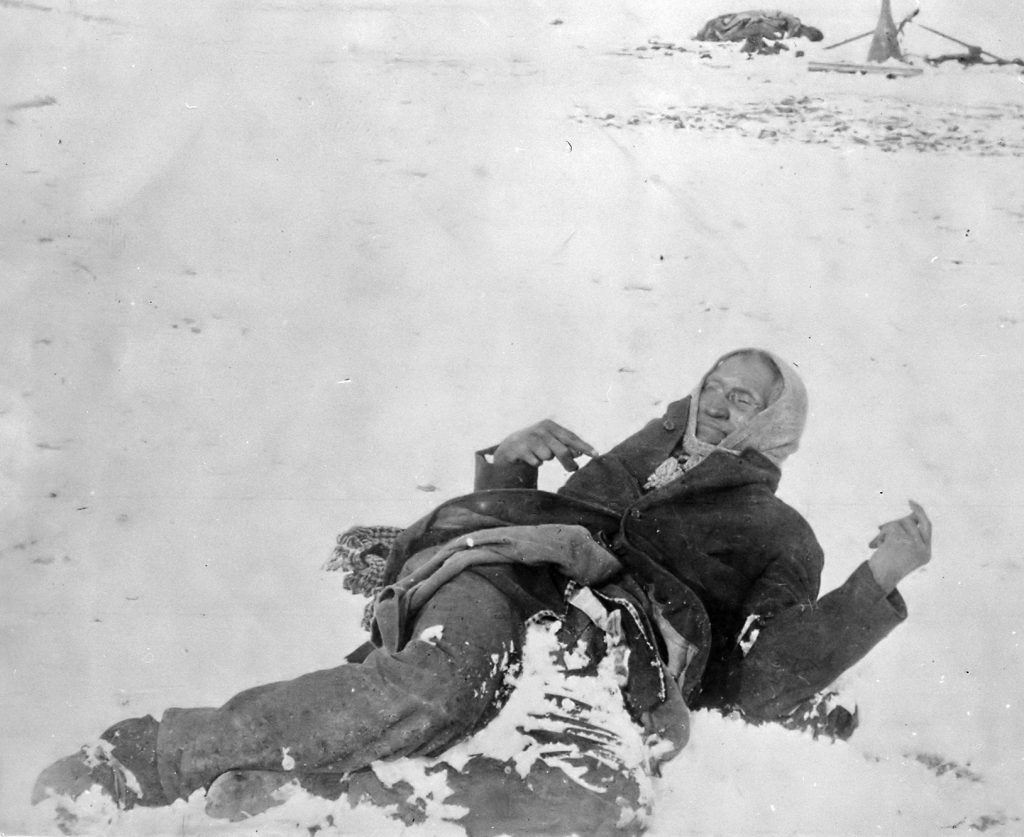

As I read this, I couldn’t help but think of history and how the Army has used new tech to defeat armed civilian populations on US soil. In fact, the Wounded Knee Massacre used new military tech (Hotchkiss guns) to mow down Indian people as they realized they were under attack and went to retrieve their confiscated weapons.

This has happened before. We are just digitizing the warfare, automating the messy bits to make it even less traumatic for the men and women driving the killing.

Automated Inference on Criminality Using Face Images

This is the actual title of a paper that looked at using machine learning to detect features of the human face that are associated with “criminality” (thank you Catherine Flick for retweeting Prof Tom Crick). The idea was to be able to apply an algorithm to mug shots to determine, based on facial features, who was the criminal and who was not. The blog “Callingbullshit.org” (new fav!) wrote a post on the history of doing this sort of thing, and the dangers of encapsulating this sort of inequality.

This thread highlights the obvious bias:

We need more sunshine on this

We need more sunshine on this topic. Technologists especially need to talk about what is going on, and vigorously debate the implications for society. I plan to do my part by documenting and collecting the stories that I come across.

What do you think we can do? Let me know in the comments!